In the past month there have been several interesting developments related to quantum computing:

Spoofing random circuit sampling

Claims of quantum supremacy via random circuit sampling are troublesome because it is so difficult to verify that the quantum processor is working correctly. The Google team used cross entropy benchmarking to verify their original experimental demonstration. The (linear) cross entropy benchmarking fidelity is essentially the average of the simulated output probabilities of the observed bitstrings. It is rescaled so that an ideal device scores close to 1 and a device emitting uniformly random bitstrings scores close to 0. One criticism of this approach is that this fidelity cannot be computed for circuits operating in the classically intractable quantum supremacy regime, because computing it requires the circuit's simulated output probabilities. The authors were limited to computing the fidelity for smaller, classically tractable circuits and comparing its scaling to the expected gate error rates. This approach has several issues, explained clearly in a blog post by Gil Kalai discussing a preprint from last year, Limitations of Linear Cross-Entropy as a Measure for Quantum Advantage.
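As a rough illustration, the linear cross entropy benchmarking fidelity can be computed from a list of sampled bitstrings and a table of simulated ideal probabilities. This is a minimal Python sketch, not Google's analysis code. The function name `linear_xeb`, the integer indexing of bitstrings, and the toy probabilities are assumptions made for illustration.

```python
def linear_xeb(ideal_probs, samples):
    """Linear cross entropy benchmarking fidelity F = D * mean_i P(x_i) - 1.

    ideal_probs: simulated output probabilities P(x) of the ideal circuit,
                 indexed by the integer value of each n-bit string (D = 2**n entries).
    samples:     bitstrings observed on the device, as integers in range(D).
    """
    dim = len(ideal_probs)
    mean_p = sum(ideal_probs[x] for x in samples) / len(samples)
    return dim * mean_p - 1


# Toy usage with hypothetical numbers on 2 qubits (D = 4).
probs = [0.5, 0.25, 0.125, 0.125]
print(linear_xeb(probs, [0, 1, 0, 2]))  # 0.375
```

The expensive step is producing `ideal_probs`. That requires simulating the circuit, which is exactly what becomes infeasible in the supremacy regime.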

New attacks on the cross entropy benchmarking fidelity have now appeared as arXiv preprints:

Changhun Oh and collaborators have come up with a method for spoofing the cross entropy benchmarking fidelity in recent Gaussian BosonSampling experiments.

Dorit Aharonov and collaborators show that samples from noisy random quantum circuits can be generated classically, to a good approximation, in polynomial time.
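Why can a fidelity score be spoofed at all? The toy calculation below is not the method of either preprint. It shows that the linear cross entropy score rewards concentrating on high-probability outputs rather than reproducing the ideal distribution. A sampler that always returns the single most likely bitstring scores at least as well as a perfect sampler, because $\sum_x p(x)^2 \le \max_x p(x)$.

The distribution is hypothetical. This spoofer also cheats by reading off the ideal probabilities; a practical attack cannot compute these for large circuits and has to rely on cheaper approximations.

```python
def expected_xeb(ideal_probs, sampler_probs):
    """Expected linear XEB score of a sampler with output distribution q
    against ideal output probabilities p: F = D * sum_x q(x) p(x) - 1."""
    dim = len(ideal_probs)
    return dim * sum(q * p for q, p in zip(sampler_probs, ideal_probs)) - 1


p = [0.5, 0.25, 0.125, 0.125]                      # hypothetical ideal distribution, D = 4
perfect = expected_xeb(p, p)                       # sampler reproducing p exactly
uniform = expected_xeb(p, [0.25] * 4)              # fully depolarized device
spoofer = expected_xeb(p, [1.0, 0.0, 0.0, 0.0])    # always outputs the heaviest bitstring
print(perfect, uniform, spoofer)  # 0.375 0.0 1.0
```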

Classical shadows and bosonic modes

Classical shadows continue to be a highly fruitful avenue towards a potential near-term quantum advantage. Hsin-Yuan Huang and collaborators have now shown how classical shadows can be used to predict arbitrary quantum processes. To be precise, they give a procedure for estimating observables of a quantum state $\rho$ after the application of a completely positive trace-preserving map (describing, for example, the evolution of a quantum state in the presence of environmental noise).
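For readers new to the qubit formalism, here is a minimal sketch of the standard single-qubit classical shadow with random Pauli measurements. It estimates $\langle P \rangle$ for a state specified by its Bloch vector. It illustrates state shadows only, not the process-learning procedure of Huang and collaborators. The helper names and the Bloch-vector simulation of the measurements are assumptions for illustration.

```python
import random


def measure(bloch, basis, rng):
    """Measure Pauli `basis` ("X", "Y" or "Z") on a single qubit with Bloch
    vector `bloch`; outcome +1 occurs with probability (1 + r_basis) / 2."""
    r = bloch["XYZ".index(basis)]
    return 1 if rng.random() < (1 + r) / 2 else -1


def shadow_snapshots(bloch, n_snapshots, seed=0):
    """Collect (basis, outcome) pairs, choosing the basis uniformly at random."""
    rng = random.Random(seed)
    snapshots = []
    for _ in range(n_snapshots):
        basis = rng.choice("XYZ")
        snapshots.append((basis, measure(bloch, basis, rng)))
    return snapshots


def estimate_pauli(snapshots, pauli):
    """Shadow estimate of <pauli>. Each snapshot rho_hat = 3 |b><b| - I
    (|b> the measured eigenstate) gives tr(pauli * rho_hat) = 3 * b if the
    measured basis matches `pauli`, and 0 otherwise."""
    return sum(3 * b for basis, b in snapshots if basis == pauli) / len(snapshots)


snapshots = shadow_snapshots((0.6, 0.0, 0.8), 60_000)
print(estimate_pauli(snapshots, "X"), estimate_pauli(snapshots, "Z"))  # near 0.6 and 0.8
```

The factor of 3 comes from inverting the averaged measurement channel. Without it, the estimate of $\langle P \rangle$ would be shrunk by the 1/3 chance of measuring in the matching basis.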

When we first became interested in classical shadows almost a year ago, a natural question that arose was whether the qubit-based formalism could be translated to bosonic systems. This turns out to be a hard problem because the proof of optimality of shadow methods rests on properties of finite-dimensional vector spaces. These properties do not easily carry over to infinite-dimensional continuous variable quantum systems, as discussed in The Curious Nonexistence of Gaussian 2-Designs.
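One way to see where finite dimension enters is the following standard qubit result, offered as a heuristic rather than the argument of the paper cited above. Consider shadows built from a unitary 2-design on a $d$-dimensional Hilbert space (for example random global Cliffords on $n$ qubits, with $d = 2^n$), followed by a computational-basis measurement. The measurement channel and its inverse are then

$$\mathcal{M}(X) = \frac{X + \operatorname{tr}(X)\, I}{d + 1}, \qquad \mathcal{M}^{-1}(X) = (d + 1)\, X - \operatorname{tr}(X)\, I,$$

so each snapshot is $\hat{\rho} = (d + 1)\, U^\dagger |b\rangle\langle b| U - I$. Both the design average and the prefactor $d + 1$ lose their meaning as $d \to \infty$, which is roughly why the construction does not transfer directly to continuous variable systems.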

A team at NIST/University of Maryland now appears to have solved this problem. First, they show that the non-convergent integrals arising in the continuous variable case can be avoided by considering "rigged t-designs", obtained by expanding the Hilbert space to include non-normalizable states. Experimentally meaningful predictions can then be obtained by regularizing the designs to have a finite maximum or average energy. In a second study, the team recasts existing bosonic mode tomography approaches in the classical shadow language to obtain bounds for estimating unknown bosonic states. In practice, however, homodyne tomography performs significantly better than the bounds obtained using classical shadows. These works open the way to further studies and applications of shadow-based methods in bosonic systems.

Edit: Another paper on continuous variable shadows appeared today: arXiv:2211.07578

IBM's Osprey 433-qubit quantum processor

Last week IBM made several announcements as part of their annual quantum summit, including their new 433-qubit Osprey device. Olivier Ezratty posted a great hype-free analysis of IBM's marketing announcements. In short, large two-qubit gate errors make such large qubit counts useless at this stage, but the advances in integrating the classical control electronics are an important step forward. IBM still leads the development of superconducting quantum processors. It is also nice to see that they are working towards integrating error mitigation techniques into their software. At present, cloud quantum computing providers release devices with atrocious error rates (coughRigetticough). This forces users to spend significant time and money implementing error mitigation techniques in the hope of extracting something useful out of their devices.
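A back-of-the-envelope calculation shows why gate errors, rather than qubit counts, set the limit. The sketch assumes independent two-qubit gate errors at a single fixed rate, which is a strong simplification. The 1% error rate used below is a hypothetical round number, not a measured Osprey figure.

```python
import math


def circuit_fidelity(gate_error, n_gates):
    """Crude success probability of a circuit: the chance that none of its
    n_gates two-qubit gates fails, assuming independent errors at a fixed rate."""
    return (1 - gate_error) ** n_gates


def max_gates(gate_error, target_fidelity):
    """Largest number of gates keeping circuit_fidelity >= target_fidelity."""
    return math.floor(math.log(target_fidelity) / math.log(1 - gate_error))


# Hypothetical error rate of 1% per two-qubit gate (not a measured Osprey figure).
print(max_gates(0.01, 0.5))  # 68
```

Under these assumptions, only 68 gates fit before the fidelity drops below one half. That is far fewer than a single layer of gates acting on every pair of 433 qubits (about 216 gates).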

Bad news for NISQ?

Sitan Chen and collaborators analyze the capabilities of NISQ devices using tools from complexity theory, defining a complexity class that lies between classical computation and noiseless quantum computation. Their model rules out quantum speedups on near-term noisy devices for certain algorithms, including Grover search.

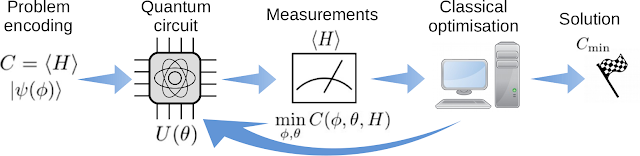

Variational quantum chemistry requires gate-error probabilities below the fault tolerance threshold. The authors compare different variational quantum eigensolvers. Gate errors severely limit the accuracy of energy estimates, making minimizing circuit depths of prime importance. Thus, hardware-efficient ansatzes are preferred over deeper physically-inspired variational circuits. Regardless, supremely low error rates seem to be needed to reach chemical accuracy.
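To get a feel for why depth matters so much, here is a toy estimate that is not the noise model or the set of ansatzes studied in the paper. It assumes global depolarizing noise: with probability $F = (1-\epsilon)^G$ the circuit prepares the ideal state, and otherwise it prepares the maximally mixed state. The energy bias is then $(1-F)\,|E_\text{ideal} - \operatorname{tr}(H)/d|$. The 1 Ha energy gap below is hypothetical, and chemical accuracy is taken as roughly 1.6 mHa.

```python
CHEMICAL_ACCURACY_HA = 1.6e-3  # roughly 1 kcal/mol, in hartree


def noisy_energy(e_ideal, e_mixed, fidelity):
    """Toy global depolarizing model: with probability `fidelity` the circuit
    prepares the ideal state, otherwise the maximally mixed state, whose
    energy is e_mixed = tr(H)/d."""
    return fidelity * e_ideal + (1 - fidelity) * e_mixed


def max_gate_error(n_gates, energy_gap):
    """Largest per-gate error rate eps for which the energy bias
    (1 - F) * energy_gap stays within chemical accuracy, with F = (1 - eps)**n_gates.
    energy_gap = |e_ideal - e_mixed| in hartree (assumed > CHEMICAL_ACCURACY_HA)."""
    min_fidelity = 1 - CHEMICAL_ACCURACY_HA / energy_gap
    return 1 - min_fidelity ** (1 / n_gates)


# Hypothetical 1 Ha gap: compare a shallow and a deep ansatz.
for gates in (100, 1000):
    print(gates, max_gate_error(gates, 1.0))
```

Even in this optimistic model, a 100-gate circuit needs per-gate errors of order $10^{-5}$. Each tenfold increase in gate count tightens that requirement roughly tenfold, which is consistent with the preference for shallow, hardware-efficient circuits.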

Exponentially tighter bounds on limitations of quantum error mitigation. Quoting from the abstract, "a noisy device must be sampled exponentially many times to estimate expectation values." At first glance, this is good news for cloud quantum processor providers: simply by making your qubits noisier, you can increase your revenue exponentially! However, "there are classical algorithms that exhibit the same scaling in complexity," so classical cloud computing providers will give you much much much better value for money. "If error mitigation is used, ultimately this can only lead to an exponential time quantum algorithm with a better exponent, putting up a strong obstruction to the hope for exponential quantum speedups in this setting."
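A back-of-the-envelope model shows where the exponential comes from. This simplified sketch assumes global depolarizing noise with a fixed fidelity per circuit layer, with mitigation done by simply rescaling the measured expectation value. The bounds in the paper are more general and are not reproduced here. The function name and parameters are illustrative.

```python
import math


def shots_needed(layer_fidelity, depth, precision):
    """Toy model of error mitigation by rescaling under global depolarizing noise.

    The noise shrinks an observable's expectation value by f = layer_fidelity**depth.
    Dividing the noisy estimate by f inflates its standard error by a factor 1/f,
    so reaching additive precision `precision` for an observable bounded by 1
    takes roughly 1 / (f**2 * precision**2) shots: exponential in depth."""
    damping = layer_fidelity ** depth
    return math.ceil(1 / (damping ** 2 * precision ** 2))


for depth in (10, 20, 40):
    print(depth, shots_needed(0.9, depth, 0.01))
```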

Towards fault tolerant quantum computing

Daniel Gottesman has a relatively accessible survey on quantum error correcting codes. I was surprised to see that quantum error correction has not yet been reduced to an engineering challenge of building bigger devices with the required fidelities. Understanding the properties of codes and developing error correction methods that are more robust against different error types are still active areas of research.

Quantum error correcting codes typically assume that errors are uncorrelated in time and space. In the presence of correlated errors, at best a lower error rate is required for quantum error correction to work, and at worst the accumulation of uncorrectable errors will make the result of the computation useless. The Google team have put out a preprint aimed at reducing correlated errors arising from the excitation of their superconducting qubits into higher energy states outside the computational subspace (leakage).
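A toy calculation makes the difference between independent and correlated errors concrete. This sketch uses a classical 3-bit repetition code with majority-vote decoding. The noise model is hypothetical: a single event can flip two adjacent bits at once. It is not a model of leakage in superconducting qubits.

```python
from itertools import product


def logical_failure(p, q):
    """Exact logical failure probability of a 3-bit repetition code with
    majority-vote decoding under a hypothetical noise model:
      - each bit flips independently with probability p;
      - with probability q, one correlated event flips bits 0 and 1 together."""
    failure = 0.0
    for burst in (0, 1):
        weight = q if burst else 1 - q
        for flips in product((0, 1), repeat=3):
            prob = weight
            for f in flips:
                prob *= p if f else 1 - p
            # The correlated event toggles bits 0 and 1 on top of independent flips.
            final = (flips[0] ^ burst, flips[1] ^ burst, flips[2])
            if sum(final) >= 2:  # majority vote now returns the wrong logical bit
                failure += prob
    return failure


print(logical_failure(0.01, 0.0))   # independent errors only
print(logical_failure(0.01, 0.01))  # same rate, plus correlated pair flips
```

With independent errors alone, the failure probability is $3p^2 - 2p^3$, roughly 0.0003 for $p = 0.01$. Adding correlated pair flips at the same 1% rate raises it to roughly 0.01. A single correlated event defeats the code, so the failure rate becomes linear rather than quadratic in the error probability.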